Bit notations in computing are essential means by which computers encode, process, and store data.

Introduction

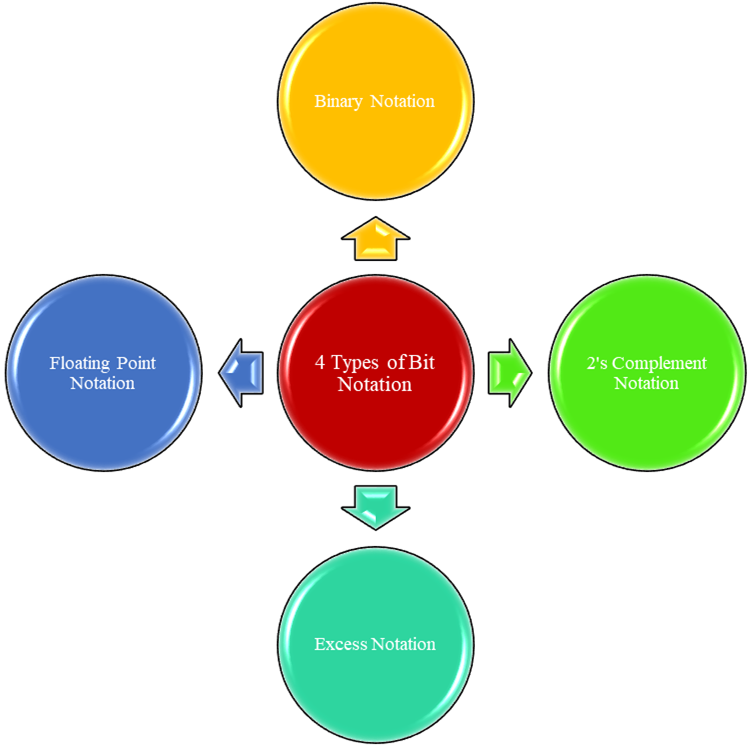

Each notation (Binary, 2’s Complement, Excess, and Floating-Point) fulfills a specific need in digital computation and memory management.

In computing, decimal numbers are converted into binary using these notations, which have evolved over time.

Note: the decimal numeral system is also called the base-ten positional numeral system, or the denary or decanary system. It is the standard system for denoting integer and non-integer numbers.

Prerequisite for Understanding Bit Notations in Computing

You should be familiar with the binary number system, bits, and the conversion of numbers from decimal to binary and vice versa.
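As a quick refresher on that conversion, here is a minimal Python sketch. The function names `to_binary` and `from_binary` are illustrative choices, and the sketch assumes non-negative integers only.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to a binary string by repeated division by 2."""
    if n < 0:
        raise ValueError("this sketch handles non-negative integers only")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next least-significant bit
        n //= 2
    return "".join(reversed(digits))


def from_binary(bits: str) -> int:
    """Convert a binary string back to an integer, one bit at a time."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)  # shift left by one place, then add the new bit
    return value


print(to_binary(13))        # 1101
print(from_binary("1101"))  # 13
```

Python's built-in `bin()` and `int(text, 2)` do the same job; the loops are spelled out here only to show the steps.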

4 Types of Bit Notations in Computing

Binary Notation

To know more about it click here.

2’s Complement Notation

To know more about it click here.

Excess Notation

To know more about it click here.

Floating-Point Notation

To know more about it click here.

Conclusion

Each bit notation type plays a crucial role in making computer arithmetic accurate and efficient across various applications, from handling simple integers to managing complex calculations in software and hardware alike.

Understanding these encodings gives a glimpse into the intricate workings of the digital devices we rely on every day. It can also help when debugging or optimising code.

Frequently Asked Questions (FAQs)

Why is binary notation important in computers?

Binary notation is fundamental in computing because it uses only two symbols (0 and 1), which map directly onto computer hardware that distinguishes between two states, like high and low voltage. This efficient, stable system underlies all data processing and storage in digital systems.

What is the difference between Binary and 2’s Complement notation?

Plain (unsigned) binary notation represents only non-negative integers, while 2’s Complement encodes both positive and negative integers. In 2’s Complement, the leftmost bit acts as the sign bit (0 for non-negative values, 1 for negative values), and the same addition procedure works for both signs, which makes arithmetic operations easier.
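The sign-bit behaviour can be seen in a small Python sketch. The helper names and the default 4-bit width are assumptions made for illustration; real hardware typically uses 8, 16, 32, or 64 bits.

```python
def to_twos_complement(value: int, width: int = 4) -> str:
    """Encode a signed integer as a 2's complement bit string of the given width."""
    lowest = -(1 << (width - 1))
    highest = (1 << (width - 1)) - 1
    if not lowest <= value <= highest:
        raise ValueError(f"{value} does not fit in {width} bits")
    # Masking keeps the low `width` bits, which wraps negative values around.
    return format(value & ((1 << width) - 1), f"0{width}b")


def from_twos_complement(bits: str) -> int:
    """Decode a 2's complement bit string back to a signed integer."""
    raw = int(bits, 2)
    if bits[0] == "1":            # leftmost bit set means the value is negative
        raw -= 1 << len(bits)
    return raw


print(to_twos_complement(5))         # 0101
print(to_twos_complement(-3))        # 1101
print(from_twos_complement("1101"))  # -3
```

With 4 bits the representable range is -8 to 7, so there is one more negative value than positive.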

How does Excess notation work?

Excess notation, or biased notation, represents signed numbers by adding a fixed bias (like 4 or 8) to the actual value, which helps encode positive and negative numbers without a sign bit. It is often used in hardware applications and floating-point exponent encoding.
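As a rough illustration, the following Python sketch encodes and decodes values in excess notation. The function names are hypothetical, and the pairing of excess-4 with 3 bits is just one example of a bias and width.

```python
def to_excess(value: int, bias: int, width: int) -> str:
    """Encode a signed integer by adding the bias and writing the result in plain binary."""
    stored = value + bias
    if not 0 <= stored < (1 << width):
        raise ValueError(f"{value} does not fit in excess-{bias} with {width} bits")
    return format(stored, f"0{width}b")


def from_excess(bits: str, bias: int) -> int:
    """Decode an excess-notation bit string by subtracting the bias."""
    return int(bits, 2) - bias


# Excess-4 with 3 bits covers -4 through 3.
for value in range(-4, 4):
    print(value, to_excess(value, bias=4, width=3))

print(from_excess("0101", bias=8))  # -3
```

Because the bias is simply added, the bit patterns sort in the same order as the values they represent, which is one reason the scheme suits exponent fields.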

What is a radix point in binary?

The radix point in binary, also called a binary point, divides the integer part from the fractional part in a binary number. It operates similarly to a decimal point in base-10 numbers. For example, 10.10 in binary represents the decimal number 2.5.
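To check the 10.10 example by hand, each bit after the radix point is worth 1/2, 1/4, 1/8, and so on. A minimal Python sketch follows; the function name `binary_fraction_to_decimal` is an illustrative choice, not a standard library call.

```python
def binary_fraction_to_decimal(text: str) -> float:
    """Convert an unsigned binary string with an optional radix point to a float."""
    integer_part, _, fraction_part = text.partition(".")
    value = float(int(integer_part, 2)) if integer_part else 0.0
    for position, bit in enumerate(fraction_part, start=1):
        if bit not in "01":
            raise ValueError(f"invalid binary digit: {bit!r}")
        value += int(bit) * 2.0 ** -position  # 1/2, 1/4, 1/8, ...
    return value


print(binary_fraction_to_decimal("10.10"))    # 2.5
print(binary_fraction_to_decimal("101.101"))  # 5.625
```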

What is the IEEE 754 standard in floating-point notation?

The IEEE 754 standard is a widely used format for representing floating-point numbers in computers, defining single and double precision types. It divides each number into three parts (sign, exponent, and mantissa), which lets a fixed number of bits represent both very large and very small values, usually as close approximations rather than exact values.

What are the differences between single and double precision in floating-point representation?

Single precision uses 32 bits with 1 sign bit, 8 bits for the exponent, and 23 bits for the mantissa. Double precision uses 64 bits with 1 sign bit, 11 bits for the exponent, and 52 bits for the mantissa. Double precision offers greater accuracy and range, useful for high-precision applications.
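These field widths can be inspected directly. The sketch below uses Python's standard `struct` module to pack a number into its IEEE 754 bytes and then splits the bits with shifts and masks; the function names are illustrative.

```python
import struct


def float32_fields(x: float) -> tuple[int, int, int]:
    """Return (sign, exponent, mantissa) of x stored as IEEE 754 single precision."""
    (raw,) = struct.unpack(">I", struct.pack(">f", x))
    sign = raw >> 31                 # 1 bit
    exponent = (raw >> 23) & 0xFF    # 8 bits, stored in excess-127
    mantissa = raw & 0x7FFFFF        # 23 bits
    return sign, exponent, mantissa


def float64_fields(x: float) -> tuple[int, int, int]:
    """Return (sign, exponent, mantissa) of x stored as IEEE 754 double precision."""
    (raw,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = raw >> 63                   # 1 bit
    exponent = (raw >> 52) & 0x7FF     # 11 bits, stored in excess-1023
    mantissa = raw & ((1 << 52) - 1)   # 52 bits
    return sign, exponent, mantissa


print(float32_fields(1.0))    # (0, 127, 0)
print(float64_fields(1.0))    # (0, 1023, 0)
print(float32_fields(-6.25))  # (1, 129, 4718592)
```

For -6.25, the value is 1.1001 in binary times 2 squared, so the stored exponent is 2 + 127 = 129 and the mantissa field holds the bits 1001 followed by zeros.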

What are the main drawbacks of floating-point notation?

Floating-point notation can introduce rounding errors due to its limited precision, and operations require more processing power than integer arithmetic. Precision is dependent on mantissa length, which can lead to inaccuracies.
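A short Python sketch makes the rounding issue concrete. Python's `float` is double precision; the round trip through `struct` is used here only to simulate the shorter single-precision mantissa.

```python
import math
import struct

total = 0.1 + 0.2
print(total == 0.3)              # False: neither 0.1 nor 0.2 is exactly representable
print(math.isclose(total, 0.3))  # True: compare with a tolerance instead

# Store 0.1 in single precision and read it back as a double.
(as_float32,) = struct.unpack(">f", struct.pack(">f", 0.1))
print(as_float32 == 0.1)         # False: fewer mantissa bits, larger error
print(abs(as_float32 - 0.1))     # size of the extra error
```

A common practical consequence is that floating-point values are compared with a tolerance rather than with `==`.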

Why can't a 4-bit floating-point representation handle large numbers?

A 4-bit floating-point representation lacks the range and precision necessary to represent large or complex numbers, as it does not provide enough bits to accurately represent both the exponent and mantissa.

How do computers use binary notation in everyday applications?

Computers use binary notation for virtually all internal processes, from data storage and processing to software instruction execution. For example, images, text, audio, and video are all converted to binary sequences to be processed by computer systems.
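For text, this conversion can be sketched in a few lines of Python. The sketch assumes UTF-8 encoding, and the helper names are illustrative.

```python
def text_to_bits(text: str) -> str:
    """Encode text as UTF-8 and show each byte as eight binary digits."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


def bits_to_text(bits: str) -> str:
    """Reverse the process: parse each 8-bit group and decode the bytes as UTF-8."""
    return bytes(int(chunk, 2) for chunk in bits.split()).decode("utf-8")


encoded = text_to_bits("Hi")
print(encoded)                # 01001000 01101001
print(bits_to_text(encoded))  # Hi
```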